As early as 2016, when NVIDIA CEO Jensen Huang personally delivered the company's first DGX-1 AI system to OpenAI's Sam Altman and Elon Musk, no one could have anticipated the profound role that delivery would play in the AI revolution later sparked by ChatGPT. At the time, NVIDIA's GPUs were mainly associated with video games and cryptocurrency mining, and far less with machine learning.

Soon after, most chip manufacturers recognized the importance of building AI capabilities directly into their chips. As AI applications multiplied, demand for the new generation of GPUs, and the valuations of the companies making them, skyrocketed.

Qualcomm announced AI-enabled Snapdragon chips designed for smartphones and PCs. Meanwhile, researchers at the University of California, San Diego developed the NeuRRAM chip, which performs AI computations directly in memory, reducing energy consumption while supporting a range of AI applications such as image recognition and reconstruction.

What Is an AI Chip?

An AI chip is a specialized processor designed to accelerate AI algorithms. Unlike traditional general-purpose processors such as CPUs, AI chips are optimized at the hardware level for AI workloads. These workloads, which include deep learning model training, inference, and data analysis, require enormous numbers of matrix operations executed in parallel. Traditional chip architectures often struggle to meet these demands, so AI chips use architectures tailored to neural network computations, significantly improving computational efficiency.
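To make "extensive matrix operations" concrete, here is a minimal Python sketch, assuming a single fully connected neural network layer. The function names `dense_layer` and `mac_count` are hypothetical illustrations, not part of any chip vendor's API. The sketch shows that a layer reduces to many independent multiply-accumulate (MAC) operations, which is exactly the kind of work AI chips spread across thousands of parallel hardware units.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense_layer(weights: Matrix, inputs: Vector, bias: Vector) -> Vector:
    """Compute y = W x + b for one fully connected layer (illustrative sketch).

    Each output element is an independent dot product, so hardware with
    many MAC units could compute all of them at the same time. Here they
    are computed one after another, as a general-purpose CPU loop would.
    """
    outputs: Vector = []
    for row, b in zip(weights, bias):
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate operation
        outputs.append(acc)
    return outputs


def mac_count(n_outputs: int, n_inputs: int, batch: int = 1) -> int:
    """Number of multiply-accumulate operations one dense layer needs."""
    return n_outputs * n_inputs * batch


if __name__ == "__main__":
    print(dense_layer([[1, 2], [3, 4]], [1, 1], [0, 0.5]))  # [3, 7.5]
    # A hypothetical 4096 x 4096 layer processing a batch of 32 inputs:
    print(mac_count(4096, 4096, 32))  # 536870912
```

Even this single hypothetical layer needs over half a billion MACs per batch, and large models stack many such layers, which is why hardware built for massively parallel matrix math matters.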

The main types of AI chips include:

- GPU (Graphics Processing Unit): Originally built for graphics rendering, GPUs are now widely used for AI tasks, especially deep learning training.

- TPU (Tensor Processing Unit): A chip designed by Google specifically for AI computation, primarily to accelerate tensor calculations.

- FPGA (Field-Programmable Gate Array): A chip whose logic can be reconfigured after manufacturing, making it well suited to AI applications with changing or specialized requirements.

- ASIC (Application-Specific Integrated Circuit): A custom chip tailored to specific AI tasks, offering the highest performance and efficiency. Its high design cost, however, makes it economical mainly for large-scale deployment.

The Importance of AI Chips for AI Development

The emergence of AI chips has not only addressed the efficiency challenges of AI computation but has also pushed the boundaries of AI technology. Their importance is reflected in several key areas:

Enhancing Computational Power: Through parallelism and specialized design, AI chips greatly accelerate the training and inference of AI models, making it feasible to build complex, large-scale models such as GPT-4 and AlphaFold. This performance enables faster iteration on models and algorithms, driving continuous breakthroughs in AI technology.

Optimizing Energy Efficiency: Running AI models on traditional computing hardware often consumes a great deal of power. AI chips, with their specialized architecture, eliminate unnecessary computing operations and deliver more performance per watt, which is particularly important for battery-powered applications such as mobile devices and autonomous vehicles.

Accelerating Inference Deployment: AI chips in edge devices allow inference tasks to run directly on local hardware (such as smartphones, drones, and IoT devices), reducing reliance on the cloud. This low-latency, real-time approach extends AI to more real-world scenarios, such as real-time translation, intelligent monitoring, and autonomous driving.

Reducing Cost and Size: Compared with traditional chips, AI chips handle AI tasks more efficiently and with less energy, reducing the computing resources and power required and thereby lowering overall operating costs for enterprises. AI chips are also often compact, allowing them to be integrated into small devices such as wearables and smart home assistants.

Gartner predicts that global AI chip revenue will nearly double to $119 billion by 2027.

Main Application Areas of AI Chips

The high computational efficiency of AI chips has led to their adoption across many fields, bringing intelligent capabilities to a wide range of industries:

Autonomous Driving: Self-driving cars need to process massive amounts of sensor data in real time. AI chips ensure rapid computation for vehicle perception, decision-making, and control, thereby enhancing safety.

Smart Healthcare: AI chips make tasks like medical imaging analysis and gene sequencing faster and more efficient, aiding doctors in quicker diagnosis and advancing personalized medicine.

Smart Manufacturing: Industrial robots, quality-monitoring systems, and other manufacturing processes rely on precise AI algorithms. AI chips meet these demands, boosting automation and efficiency in manufacturing.

Natural Language Processing and Intelligent Assistants: For tasks like speech recognition, translation, and text generation, AI chips improve processing speed, making virtual assistants and translation devices smarter and more responsive.

In electronics manufacturing specifically, AI chips make it easier to simulate virtual factories, integrate optics and computer vision into manufacturing and inspection workflows, and help robots identify objects and respond more efficiently. With high-efficiency AI hardware, manufacturers can achieve lower latency and costs in computer-aided design applications, as well as in cutting-edge frameworks for generative AI, 3D collaboration, simulation, and autonomous machines on the factory floor.

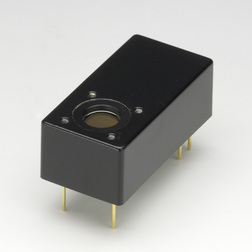

As the hardware foundation of AI development, AI chips strongly influence AI's computing power, costs, energy efficiency, and range of applications. At the core of these AI applications are electronic components such as sensors, actuators, and communication hardware. EMI is dedicated to providing highly reliable electronic components that strengthen the robustness of AI applications across industries.